What Is Edge Computing?

Edge computing is a data processing approach in which computation happens near the data source. Unlike cloud computing, which sends data to distant servers, edge computing processes information locally, at the ‘edge’ of the network. This yields faster responses and more efficient use of bandwidth. In autonomous vehicles, where split-second decisions are crucial, edge computing allows real-time reaction to sensor data without relying on remote cloud servers.

Why Edge Computing Matters for Autonomous Vehicles

Autonomous vehicles (AVs) depend on real-time analysis and near-instantaneous decision-making. Such decisions include braking, changing lanes, avoiding obstacles, and reacting to traffic signs. Every millisecond counts. Cloud computing introduces a lag because data takes time to reach the cloud and return. Edge computing avoids this round trip by bringing computation into the car’s onboard systems.
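To make the cost of that lag concrete, the short sketch below converts a processing delay into the distance a vehicle covers before it can react. The two latency values are assumptions chosen for illustration, not measurements from any real system.

```python
def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Return the metres travelled at constant speed during a processing delay."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * (delay_ms / 1000)  # ms -> s


# Assumed, illustrative latencies (hypothetical values).
ASSUMED_CLOUD_ROUND_TRIP_MS = 100.0
ASSUMED_ONBOARD_MS = 10.0

for label, delay in [("cloud", ASSUMED_CLOUD_ROUND_TRIP_MS),
                     ("edge", ASSUMED_ONBOARD_MS)]:
    metres = distance_during_delay(100.0, delay)
    print(f"{label}: {metres:.2f} m travelled before a decision")
```

Under these assumptions, a car at 100 km/h travels roughly 2.78 m while waiting on the cloud and roughly 0.28 m with onboard processing.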

Second, edge computing reduces the dependence on continuous internet connectivity. Autonomous vehicles will not always have high-speed network access, particularly in rural areas or tunnels. With edge AI, the car can keep operating smoothly even in areas with little or no connectivity.

A third advantage is enhanced data privacy. Processing data locally reduces the amount of sensitive passenger or location information sent over the internet, which improves privacy and cybersecurity.

Sensors, Data & Decisions: What Happens Inside a Self-Driving Car

Several sensors on an autonomous vehicle continuously stream vast amounts of data. These are:

- LiDAR: Detects distances with laser light and builds up a 3D environment representation.

- Radar: Detects objects and measures their velocity.

- Cameras: Take images and video for visual processing.

- Ultrasonic Sensors: Assist with near-range object detection.

These sensors can collectively generate terabytes of data per hour. Processing this data in real time is paramount to safety. Edge computing enables the vehicle to process sensor input immediately and make critical decisions such as accelerating, braking, or evading.
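A rough calculation shows why streaming all of that data to the cloud is impractical. The sketch below converts a data volume per hour into the sustained uplink rate it would need. The 1 TB/hour input is only an assumed low-end reading of "terabytes per hour", not a figure for any specific vehicle.

```python
def required_uplink_gbps(terabytes_per_hour: float) -> float:
    """Sustained uplink rate (Gbit/s) needed to stream all sensor data off the vehicle."""
    bits_per_hour = terabytes_per_hour * 1e12 * 8  # decimal terabytes -> bits
    bits_per_second = bits_per_hour / 3600
    return bits_per_second / 1e9


# Assumed volume for illustration: 1 TB of raw sensor data per hour.
print(f"{required_uplink_gbps(1.0):.2f} Gbit/s")
```

Even at this assumed low end, the vehicle would need about 2.22 Gbit/s of uninterrupted uplink, which is why the raw stream is processed onboard.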

For example, when a pedestrian suddenly crosses the car’s path, the sensors detect it and edge computing allows near-instant braking, potentially saving lives. Relying on cloud processing in such a case can be hazardous because even a minimal delay matters.

Edge AI vs Cloud AI: Which Works Best for Vehicles?

Both edge AI and cloud AI have their strengths, but they serve different purposes in autonomous driving.

- Cloud AI excels at heavy data analysis, deep learning model training, and running complex simulations. However, it requires high bandwidth and reliable connectivity.

- Edge AI, by contrast, is designed for real-time processing on-site. It lets AVs operate autonomously, process sensor data immediately, and react to their surroundings without network lag.

A hybrid strategy works best in practice. Model training, for instance, can occur in the cloud, while model inference (real-time decision-making) occurs on edge devices onboard the vehicle.
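One way to picture this split is a placement rule keyed on how long each task can wait. The sketch below is a hypothetical policy, not how any particular vehicle platform works: the `Task` type, the `choose_target` function, and the assumed cloud round-trip time are all invented for illustration.

```python
from dataclasses import dataclass

# Assumed round-trip time to a cloud service (hypothetical value).
ASSUMED_CLOUD_ROUND_TRIP_MS = 100.0


@dataclass(frozen=True)
class Task:
    name: str
    latency_budget_ms: float  # how long the vehicle can wait for a result


def choose_target(task: Task, connected: bool) -> str:
    """Return "edge", "cloud", or "defer" for a task (illustrative policy only)."""
    if task.latency_budget_ms < ASSUMED_CLOUD_ROUND_TRIP_MS:
        return "edge"   # the cloud cannot answer in time, so run onboard
    if connected:
        return "cloud"  # non-urgent, heavy work goes to the cloud
    return "defer"      # non-urgent work waits until connectivity returns


print(choose_target(Task("emergency_braking", 20.0), connected=True))
print(choose_target(Task("model_retraining", 3_600_000.0), connected=True))
```

With these assumptions, inference tasks with tight deadlines always stay on the vehicle, while training-style work goes to the cloud or waits for a connection.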

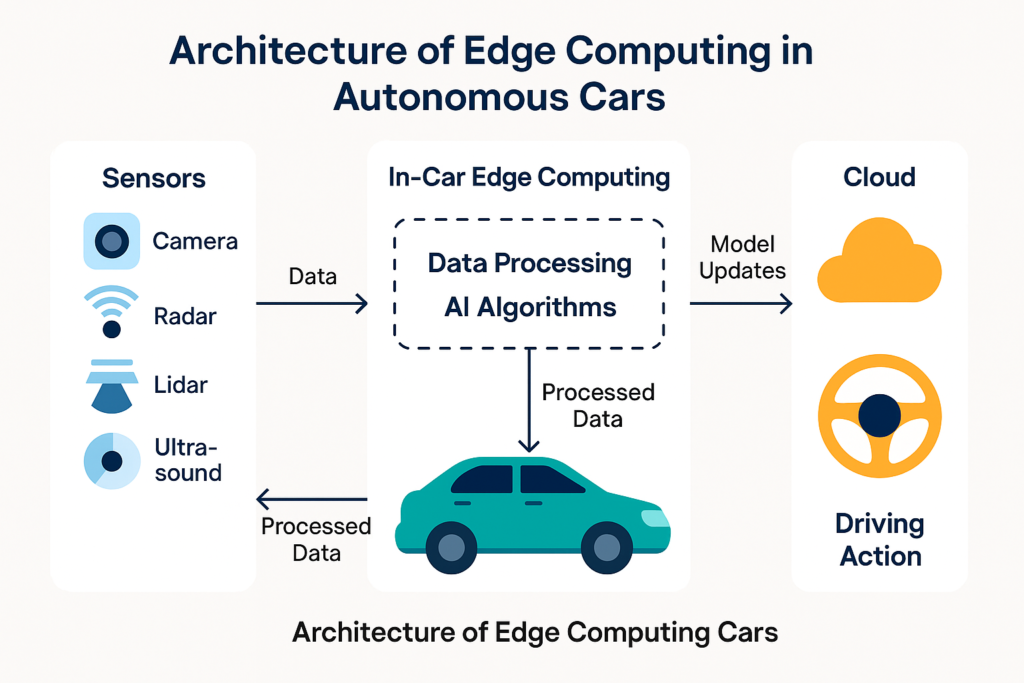

Architecture of Edge Computing in Autonomous Cars

Understanding the architecture shows how edge computing enables autonomous driving:

Hardware Components

- Onboard Computing Units: GPUs and CPUs such as NVIDIA Xavier or Intel Atom optimized for quick, low-power AI computation.

- Sensor Arrays: Cameras, LiDAR, radar, and ultrasonic sensors gather data about the environment.

- Edge Gateways: Hardware that gathers and processes sensor data before sending it to the central processor.

Software Stack

- Real-Time Operating Systems (RTOS): Provide low-latency processing.

- AI Frameworks: Toolkits such as TensorFlow Lite, NVIDIA TensorRT, or Intel OpenVINO execute AI models on the device.

- Middleware: Controls data routing, sensor synchronization, and fault management.
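As an illustration of the sensor synchronization that middleware handles, the sketch below pairs camera frames with the nearest LiDAR sweep by timestamp. The function names, the sample timestamps, and the 20 ms tolerance are assumptions made for this example; production middleware is considerably more involved.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple


def nearest_within(timestamps: List[float], target: float,
                   tolerance: float) -> Optional[int]:
    """Index of the timestamp closest to target, or None if none is within tolerance.

    `timestamps` must be sorted in ascending order.
    """
    i = bisect_left(timestamps, target)
    # Only the neighbours on either side of the insertion point can be closest.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(timestamps[j] - target))
    return best if abs(timestamps[best] - target) <= tolerance else None


def pair_frames(camera_ts: List[float], lidar_ts: List[float],
                tolerance: float = 0.02) -> List[Tuple[float, float]]:
    """Pair each camera timestamp (seconds) with the nearest LiDAR timestamp."""
    pairs = []
    for c in camera_ts:
        j = nearest_within(lidar_ts, c, tolerance)
        if j is not None:
            pairs.append((c, lidar_ts[j]))
    return pairs


# Hypothetical timestamps: a ~30 Hz camera and a 10 Hz LiDAR.
print(pair_frames([0.000, 0.033, 0.066, 0.100], [0.001, 0.101]))
```

Camera frames without a LiDAR sweep inside the tolerance are simply left unpaired here; a real system might interpolate or buffer instead.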

Real-Time Processing Flow

- Sensors pick up objects, road surfaces, and signs.

- Data is passed on to the onboard processor in real-time.

- Pre-processing removes noise or irrelevant data.

- AI algorithms process the pre-processed input.

- Decisions are applied immediately (braking, accelerating, turning).

This whole loop completes in a fraction of a second, which is vital for safety.
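The flow above can be sketched as a chain of small functions. This is a minimal illustration under stated assumptions: the input is a list of range readings in metres, the "AI algorithm" is replaced by a simple nearest-obstacle rule, and both thresholds are hypothetical values.

```python
from typing import List

ASSUMED_BRAKE_DISTANCE_M = 15.0    # hypothetical braking threshold
ASSUMED_MAX_VALID_RANGE_M = 200.0  # hypothetical maximum sensor range


def preprocess(ranges_m: List[float]) -> List[float]:
    """Drop readings that are non-positive or beyond the sensor's range (noise)."""
    return [r for r in ranges_m if 0.0 < r <= ASSUMED_MAX_VALID_RANGE_M]


def infer(ranges_m: List[float]) -> float:
    """Stand-in for the AI model: distance to the nearest obstacle (inf if none)."""
    return min(ranges_m, default=float("inf"))


def decide(nearest_m: float) -> str:
    """Map the model output to a control action."""
    return "brake" if nearest_m < ASSUMED_BRAKE_DISTANCE_M else "cruise"


def control_step(raw_ranges_m: List[float]) -> str:
    """One pass through the loop: pre-process, infer, decide."""
    return decide(infer(preprocess(raw_ranges_m)))


print(control_step([-1.0, 50.0, 12.0, 999.0]))  # noisy readings plus a close obstacle
```

In a real vehicle each stage would run against a hard deadline on dedicated hardware; the structure, not the rule inside `infer`, is the point of the sketch.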

Top Applications of Edge AI in Autonomous Driving

Edge computing enables a broad spectrum of mission-critical capabilities:

- Object Detection & Avoidance: Detects nearby vehicles, pedestrians, and other obstacles to avoid crashes.

- Lane Keeping & Navigation: Keeps the vehicle centered in its lane and plans its route.

- Traffic Sign & Signal Recognition: Reads and interprets road signs and traffic signals.

- Driver Monitoring: Tracks driver engagement in semi-autonomous vehicles.

- Real-Time Mapping: Builds and continuously updates 3D maps in real time from sensor data.

These functions must run in real time to keep the ride safe and comfortable.
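For object avoidance, one simple quantity an onboard system can compute is time-to-collision: the gap to the vehicle ahead divided by the closing speed. The sketch below assumes constant speeds and a hypothetical 2-second braking threshold; it illustrates the idea rather than any production algorithm.

```python
import math


def time_to_collision(gap_m: float, ego_speed_ms: float,
                      lead_speed_ms: float) -> float:
    """Seconds until the gap closes at constant speeds; inf if it is not closing."""
    closing_speed = ego_speed_ms - lead_speed_ms
    if closing_speed <= 0:
        return math.inf  # the lead object is as fast or faster: no collision course
    return gap_m / closing_speed


def should_brake(ttc_s: float, threshold_s: float = 2.0) -> bool:
    """Brake when the time-to-collision drops below the (assumed) threshold."""
    return ttc_s < threshold_s


ttc = time_to_collision(gap_m=15.0, ego_speed_ms=20.0, lead_speed_ms=10.0)
print(ttc, should_brake(ttc))
```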

Case Studies: Real-World Edge AI in Use Today

Let us examine companies already implementing edge computing in autonomous vehicles today:

- Tesla: Tesla’s vehicles use onboard AI chips to make critical driving decisions without relying on the cloud, which lets them respond in real time.

- NVIDIA Drive: Supplies a complete AV platform with edge computing modules to support complex AI tasks on the edge.

- Mobileye by Intel: Supplies vision-based edge computing solutions, such as collision avoidance and lane detection, to numerous car manufacturers.

- Waymo: Uses a mix of edge and cloud computing for its autonomous taxis, with onboard processing for real-time decisions and the cloud for large-scale learning.

These examples demonstrate that edge AI is not just an idea; it is already deployed in real-world vehicles.

Challenges in Implementing Edge AI for Vehicles

While the benefits are apparent, there are several challenges:

- Security Risks: Edge devices are physically accessible and thus more vulnerable to tampering or hacking.

- Power Consumption: Powerful processors draw significant power and need adequate cooling, which can cut into battery life.

- Hardware Costs: Producing small, light, yet powerful devices is expensive.

- Standardization: The lack of international standards for edge computing in the AV sector makes development more challenging.

- Data Management: Deciding what data to process on the edge and what to push to the cloud is a delicate balancing act.

Despite these issues, ongoing innovation is making edge computing safer, more efficient, and more economical.
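For the data management trade-off, one possible policy is to upload only the frames the onboard model was unsure about, so that cloud training sees the hard cases without receiving the full stream. The `Detection` type, the `select_for_upload` function, and the 0.6 confidence threshold below are assumptions made for this sketch.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Detection:
    frame_id: int
    label: str
    confidence: float  # onboard model confidence in [0, 1]


def select_for_upload(detections: List[Detection],
                      threshold: float = 0.6) -> List[int]:
    """Return the sorted ids of frames containing any low-confidence detection."""
    return sorted({d.frame_id for d in detections if d.confidence < threshold})


# Hypothetical detections from three frames.
detections = [
    Detection(1, "car", 0.95),
    Detection(2, "pedestrian", 0.40),
    Detection(2, "car", 0.90),
    Detection(3, "sign", 0.55),
]
print(select_for_upload(detections))  # frames 2 and 3 contain uncertain detections
```

Everything else stays on the vehicle, which keeps bandwidth use low and limits how much location-tagged data leaves the car.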

What’s Next? The Future of Edge AI in Self-Driving Cars

The evolution of edge AI in AVs is just getting started. Some trends to watch out for are:

- 5G Integration: Enables faster communication between vehicles and edge/cloud infrastructure, further reducing latency.

- Federated Learning: Allows vehicles to improve a shared model by exchanging model updates instead of raw data, further enhancing privacy.

- Custom AI Chips: More and more businesses are developing chips for edge inference, optimizing speed and efficiency.

- Energy-Efficient Hardware: Future edge devices will consume less energy while delivering enhanced performance.

- Over-the-Air (OTA) Software Updates: Vehicles can be updated remotely with the latest AI capabilities without a trip to the service center.

These innovations will further cement edge computing as the foundation of autonomous mobility.
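To show how federated learning keeps raw data on the vehicle, the sketch below aggregates model weights in the style of federated averaging: each vehicle reports only its locally trained weights and the number of samples it trained on, and the server computes a sample-weighted average. The flat list-of-floats representation and the example numbers are simplifications for illustration.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Sample-weighted average of per-vehicle model weights."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, n in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must report the same number of weights")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total  # each client contributes in proportion to n
    return averaged


# Two hypothetical vehicles: the second trained on three times as much data.
print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```

Only the weight vectors cross the network; the camera frames and location traces used for local training never leave the vehicles.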

Is Edge the Driving Force of Autonomy?

Edge computing is transforming the way autonomous cars operate. Real-time data analysis and decision-making within the vehicle ensure safety, speed, and independence from outside networks. It allows AVs to act promptly, drive through complex conditions, and respond to sudden changes without lag.

As hardware continues to advance and AI models improve, edge computing will be the driving force behind the autonomous revolution. For developers, engineers, and mobility companies, embracing edge technology is no longer optional.

In a world racing toward total autonomy, technologies like edge computing, and real-time platforms in DSL autonomous cars, are not just part of the future; they are the future.